Docker is a platform that allows developers to containerize and easily ship software. It helps eliminate the overhead of configuring environments to run software by, essentially, shipping the environment along with your code.

This is often explained using the shipping container analogy. Freight companies are responsible for getting shipping containers from A to B. They don’t care what goods you put in your container, but they do care that you gave it to them in the correct format.

Think of your developers as the workers creating software that they will package into a container. What they build might be complex, with special libraries, optimizations, or configurations. Once they’re ready to ship their software, it’s time to hand it over to a freight company that knows how to get it from A to B; in this analogy, that is your DevOps team. Because the software is now in a standardized format, DevOps can easily move it into production, and may even automate the process to make future releases easier.

In this article, we’ll walk through a business use case for Docker, show how to build Dockerized microservices and run them on Amazon’s Elastic Container Service (ECS), and conclude with an overview of the business benefits of using Docker.

A Use Case for Docker and Microservice Architecture

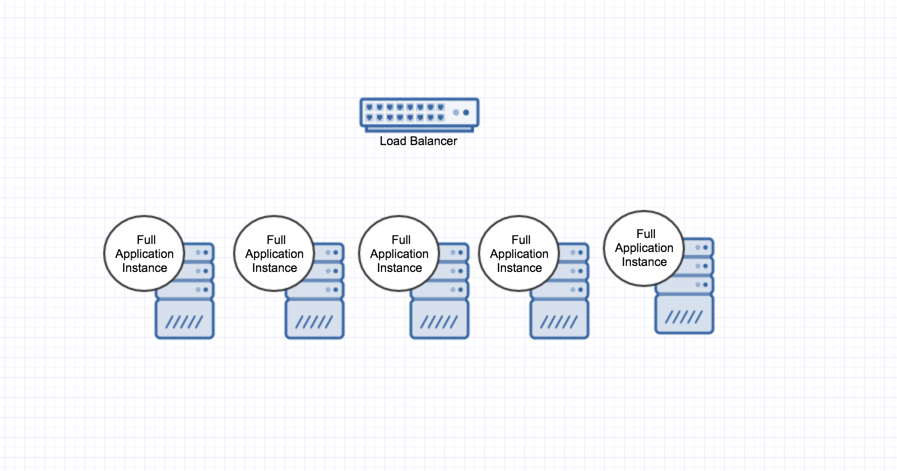

Let’s say your web application has a resource-heavy user registration page. At the beginning of every month it gets flooded with new users. To handle the load, your infrastructure runs on several large, load-balanced servers (as shown in the diagram above). Unfortunately, this much processing power is only needed about 12 days out of the year, which means your company is paying for resources it isn’t using most of the time.
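To put a rough number on that waste, here is a back-of-the-envelope sketch. The function name and the server counts are hypothetical. Only the 12 peak days come from the scenario above: one busy day at the start of each month.

```bash
# Rough count of server-days per year that a peak-sized fleet sits idle.
# Server counts are hypothetical inputs; the peak-day count comes from the scenario.
idle_server_days() {
  local peak_servers=$1      # servers needed during the monthly rush
  local baseline_servers=$2  # servers needed on an ordinary day
  local peak_days=$3         # days per year that actually need peak capacity
  local off_peak_days=$(( 365 - peak_days ))
  # Every server above the baseline is idle on every off-peak day.
  echo $(( (peak_servers - baseline_servers) * off_peak_days ))
}
```

Take a hypothetical fleet of ten servers sized for the rush, where two would cover an ordinary day. In that case, `idle_server_days 10 2 12` prints 2824: eight surplus servers sitting idle on each of the 353 off-peak days.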

Now, let’s say a critical feature has changed in your registration process: users need to be placed into a secondary reporting system for legal reasons. Currently, your registration code is buried in the rest of your application, so moving this fix to production means redeploying the entire system. Developers and the infrastructure team will have to work late this weekend to push this update out. The worst part is that this was a relatively small change!

In a Dockerized world, this is far less of an issue. The registration process could be separated from the main application and pushed out to production using a rolling update. You could do this on a Monday and wouldn’t even need to bring the site down. This makes everybody happy because it means software is in production quickly and safely.
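A rolling update replaces running containers a few at a time instead of all at once, so some copies of the service are always available. The sketch below only simulates that schedule. The function name and container counts are hypothetical. In ECS, a service's minimumHealthyPercent and maximumPercent deployment settings control the same trade-off.

```bash
# Simulate a rolling update: `total` old containers are replaced in batches of
# at most `max_unavailable`, so the remaining containers keep serving traffic.
# Old containers stop before their replacements start, so capacity dips to
# total - batch while each batch is in flight.
rolling_update_plan() {
  local total=$1 max_unavailable=$2
  local updated=0 remaining batch
  if (( max_unavailable < 1 )); then
    echo "max_unavailable must be at least 1" >&2
    return 1
  fi
  while (( updated < total )); do
    remaining=$(( total - updated ))
    # The final batch may be smaller than max_unavailable.
    batch=$(( remaining < max_unavailable ? remaining : max_unavailable ))
    updated=$(( updated + batch ))
    echo "replace $batch, serving $(( total - batch )), updated $updated/$total"
  done
}
```

For example, `rolling_update_plan 4 1` prints four batches, and three containers keep serving during each one. That is why the site never has to go down.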

To get to this point, developers could start decoupling the registration process by building it out as a REST service. Once the code for the web frontend and the registration service is separated, both are ready to be Dockerized. Developers can package all the code, libraries, and configuration into Docker images and hand them over to DevOps. A clustering tool like Docker Swarm, Amazon ECS, or Red Hat’s OpenShift would let DevOps host the Docker containers, scaling up when demand is high and down when demand is low. This in turn solves our first problem, paying for idle servers, by using resources cost-effectively.
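The scale-up and scale-down decision comes down to simple arithmetic. Divide the current load by what one container can handle, round up, and keep the result between a floor and a ceiling. This is a hypothetical rule with made-up requests-per-second figures, not the algorithm any particular clustering tool uses.

```bash
# Hypothetical scaling rule: how many registration containers should run?
#   load      current requests per second
#   capacity  requests per second one container can handle
#   min, max  floor and ceiling on the container count
desired_count() {
  local load=$1 capacity=$2 min=$3 max=$4
  # Integer ceiling division: ceil(load / capacity).
  local count=$(( (load + capacity - 1) / capacity ))
  if (( count < min )); then count=$min; fi
  if (( count > max )); then count=$max; fi
  echo "$count"
}
```

Assume each container handles 100 requests per second. A quiet day at 50 requests per second then runs the floor of 2 containers (`desired_count 50 100 2 10`), while a first-of-the-month spike at 950 requests per second runs 10.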

Solving Scalability and Decoupling Code with Docker and Microservice Architecture

Let’s illustrate setting up this containerized infrastructure with our sample project, which contains the web frontend and the registration backend. You may notice these are split into two separate repos. That’s fine. In a Service Oriented Architecture, the less these services know about each other, the better.

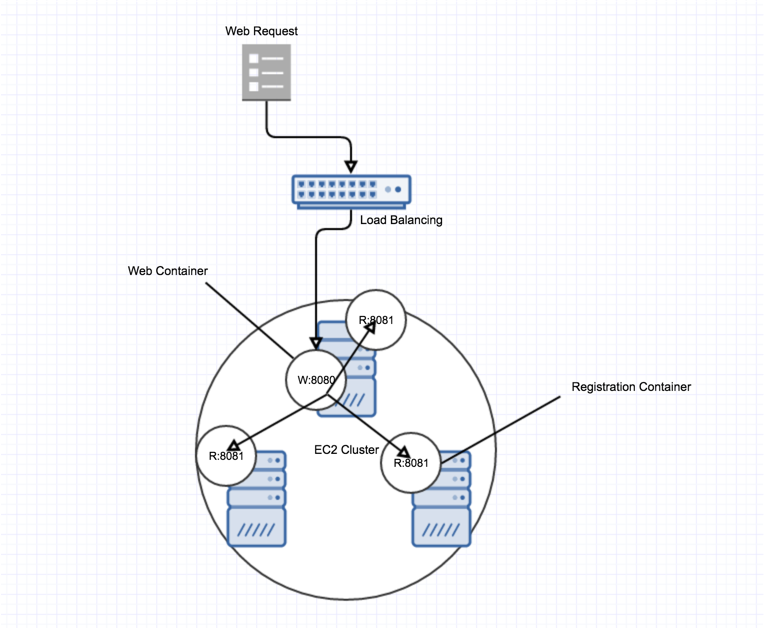

In the diagram above, we can see the web and registration containers living inside an Amazon ECS cluster. A user registration request would hit the web frontend, which could then ask any of the registration services for the appropriate response.

Let’s get started by cloning the sample project.

$ git clone git@github.com:LargePixels/pe-docker-demo.git

Make sure you have Docker and Gradle installed so we can build the project and images.

$ cd pe-web-demo

$ gradle buildDocker

$ cd ../pe-registration-demo

$ gradle buildDocker
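If you rebuild often, the two build steps above can be wrapped in a small helper. The function name is hypothetical. Going by the cd commands, it assumes that pe-web-demo and pe-registration-demo sit side by side in the current directory.

```bash
# Hypothetical helper: run the Gradle buildDocker task in each service directory.
# Assumes pe-web-demo and pe-registration-demo are subdirectories of $PWD.
build_images() {
  local project
  for project in pe-web-demo pe-registration-demo; do
    echo "Building $project"
    # Run in a subshell so a failed cd cannot leave us in the wrong directory.
    ( cd "$project" && gradle buildDocker ) || return 1
  done
}
```

The helper stops at the first project that fails to build, so a broken registration build never leaves you with a stale image you mistake for a fresh one.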

You can run the following command to see the images in your Docker Engine.

$ docker images

REPOSITORY                       TAG      IMAGE ID       CREATED         SIZE

pe-test/pe-registration-docker   latest   b702b2f835fd   8 seconds ago   195.3 MB

pe-test/pe-web-docker            latest   b340fee4c9d7   9 minutes ago   195.3 MB

frolvlad/alpine-oraclejdk8       slim     ea24082fc934   2 weeks ago     167.1 MB

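Notice that we now have our two images plus the base image they were built from. Just for fun, let’s start up the web app and visit it. The commands below launch the web image as a container on the local Docker host. In this setup that host is a virtual machine, and DOCKER_HOST tells us its address. If Docker runs natively on your machine, DOCKER_HOST is usually unset and you can browse to localhost:8080 instead.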

$ docker run -d -p 8080:8080 pe-test/pe-web-docker:latest

$ echo $DOCKER_HOST

tcp://192.168.99.103:2376

$ firefox 192.168.99.103:8080

The -p 8080:8080 flag publishes the container’s port 8080 on port 8080 of the Docker host, which is what lets us reach the app. The following command shows the running container.

$ docker ps

CONTAINER ID   IMAGE                          COMMAND                  CREATED         STATUS         PORTS                    NAMES

323df580ea6e   pe-test/pe-web-docker:latest   "java -Djava.security"   4 minutes ago   Up 4 minutes   0.0.0.0:8080->8080/tcp   stoic_pike
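Instead of copying the IP address out of DOCKER_HOST by hand before opening the browser, you can derive the URL. The sketch below is a hypothetical helper; app_url is not part of the sample project. It handles the tcp://IP:PORT form of DOCKER_HOST shown above and otherwise falls back to localhost, for example when Docker runs natively.

```bash
# Hypothetical helper: build the web app's URL from DOCKER_HOST.
# tcp://192.168.99.103:2376 -> http://192.168.99.103:8080 (daemon port dropped).
# Unset or non-tcp values (such as unix:// sockets) fall back to localhost.
app_url() {
  local port=${1:-8080} host=localhost
  case ${DOCKER_HOST:-} in
    tcp://*)
      host=${DOCKER_HOST#tcp://}  # drop the scheme
      host=${host%:*}             # drop the Docker daemon port, e.g. :2376
      ;;
  esac
  echo "http://$host:$port"
}
```

With the setup above, firefox "$(app_url)" opens the same page as the manual command.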

Using Amazon’s Elastic Container Service

To really see the benefits of these Docker images we need a scalable infrastructure to run them on. For the purposes of this demo, Amazon ECS will do just fine. In order to get your images out to the Amazon cloud, you will need to install the AWS command line interface.

Amazon provides a great wizard to make this process easier. In it, you will do the following:

- Create a Docker Repository to host your images

- Upload your images to the cloud (see below)

- Create a cluster of EC2 instances on which to run your images

- Group your images into Services

To push a Docker image to the cloud, you first need to log in.

$ aws ecr get-login --region us-east-1

$ <run the output of the previous command>.

The first command prints a “docker login” command that points your Docker client at the remote registry, and running that output logs you in. This grants access to the default registry that every AWS account comes with, whose address has this form:

https://aws_account_id.dkr.ecr.region.amazonaws.com
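Version 2 of the AWS CLI no longer includes aws ecr get-login. Instead, you pipe the output of aws ecr get-login-password into docker login. Below is a minimal sketch of that flow, wrapped in a hypothetical helper that takes your account ID and region.

```bash
# Hypothetical helper: authenticate Docker against your account's ECR registry
# using AWS CLI v2. The ECR username is always the literal string "AWS".
ecr_login() {
  local account_id=$1 region=$2
  local registry="$account_id.dkr.ecr.$region.amazonaws.com"
  aws ecr get-login-password --region "$region" \
    | docker login --username AWS --password-stdin "$registry"
}
```

For the account used in this walkthrough, that would be ecr_login 493548445823 us-east-1. Passing the password on stdin keeps it out of your shell history and process list.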

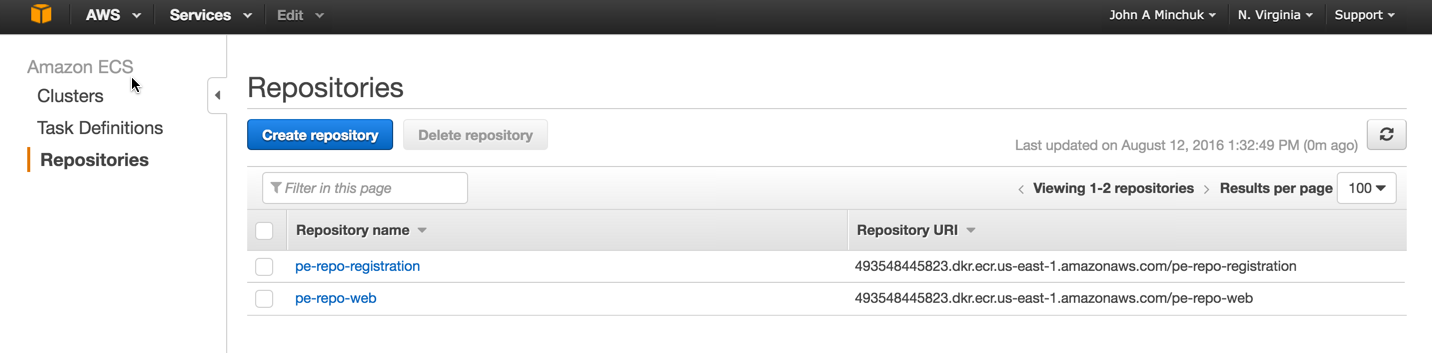

Note that this registry can hold many repositories, and each repository holds versions of an image. For this project you will need two repositories: one for the web image and one for the registration image.

Before you can push an image to a remote repository, you need to tag it with the repository’s URL, like so:

$ docker tag pe-test/pe-registration-docker:latest 493548445823.dkr.ecr.us-east-1.amazonaws.com/pe-repo-registration:latest

$ docker tag pe-test/pe-web-docker:latest 493548445823.dkr.ecr.us-east-1.amazonaws.com/pe-repo-web:latest

Now let’s push them out to AWS.

$ docker push 493548445823.dkr.ecr.us-east-1.amazonaws.com/pe-repo-web:latest

$ docker push 493548445823.dkr.ecr.us-east-1.amazonaws.com/pe-repo-registration:latest
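The tag and push commands above differ only in the image and repository names, so they can be scripted. This sketch uses the image and repository names from this walkthrough. Both the function and its local-image=repository argument format are hypothetical, so substitute your own account ID and region.

```bash
# Hypothetical helper: tag each local image with its ECR repository URI, then push it.
# Usage: push_images ACCOUNT_ID REGION local-image=repository [...]
push_images() {
  local registry="$1.dkr.ecr.$2.amazonaws.com"
  shift 2
  local pair local_image repo remote
  for pair in "$@"; do
    local_image=${pair%%=*}  # everything before the first "="
    repo=${pair#*=}          # everything after the first "="
    remote="$registry/$repo:latest"
    docker tag "$local_image" "$remote" || return 1
    docker push "$remote" || return 1
  done
}
```

For example: push_images 493548445823 us-east-1 pe-test/pe-web-docker:latest=pe-repo-web pe-test/pe-registration-docker:latest=pe-repo-registration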

Scaling up ECS with your Docker Containers

In the previous section, you used the wizard to create a cluster of EC2 instances, created two repositories to hold your images, and uploaded the images to them. Let’s have a look.

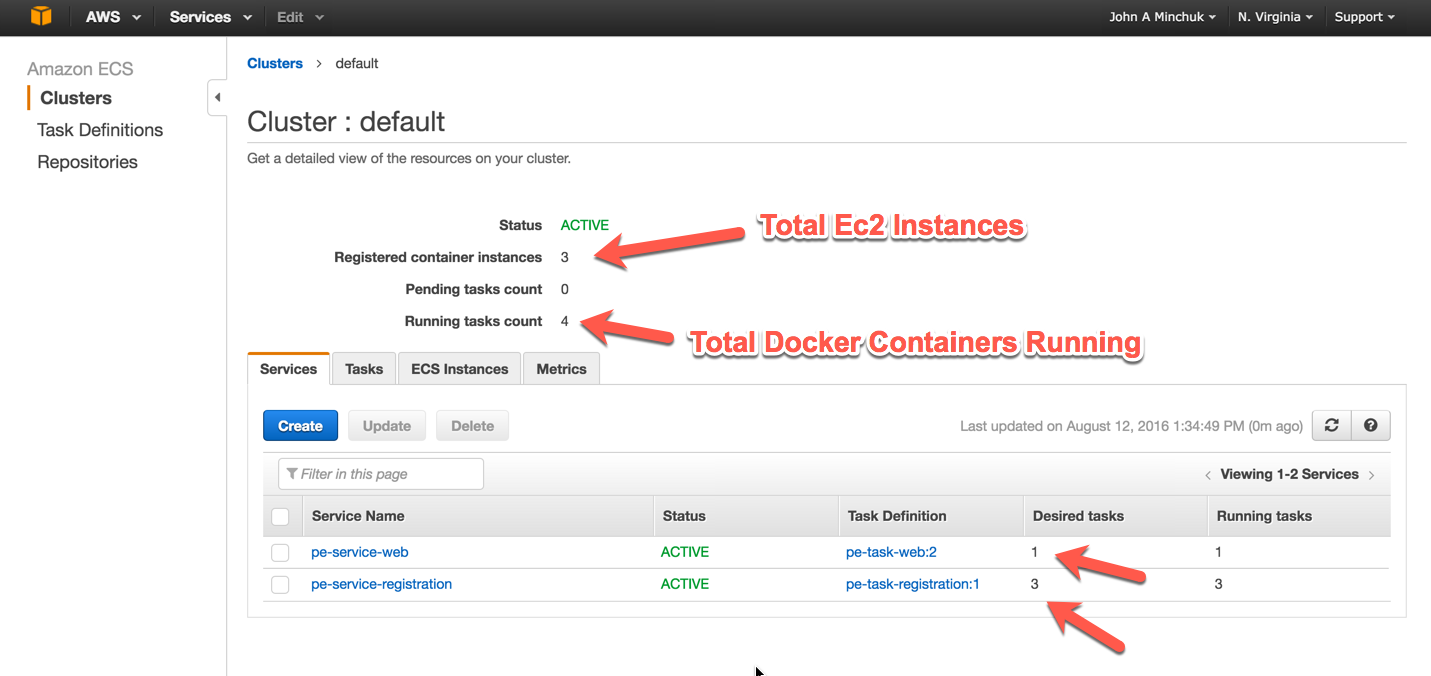

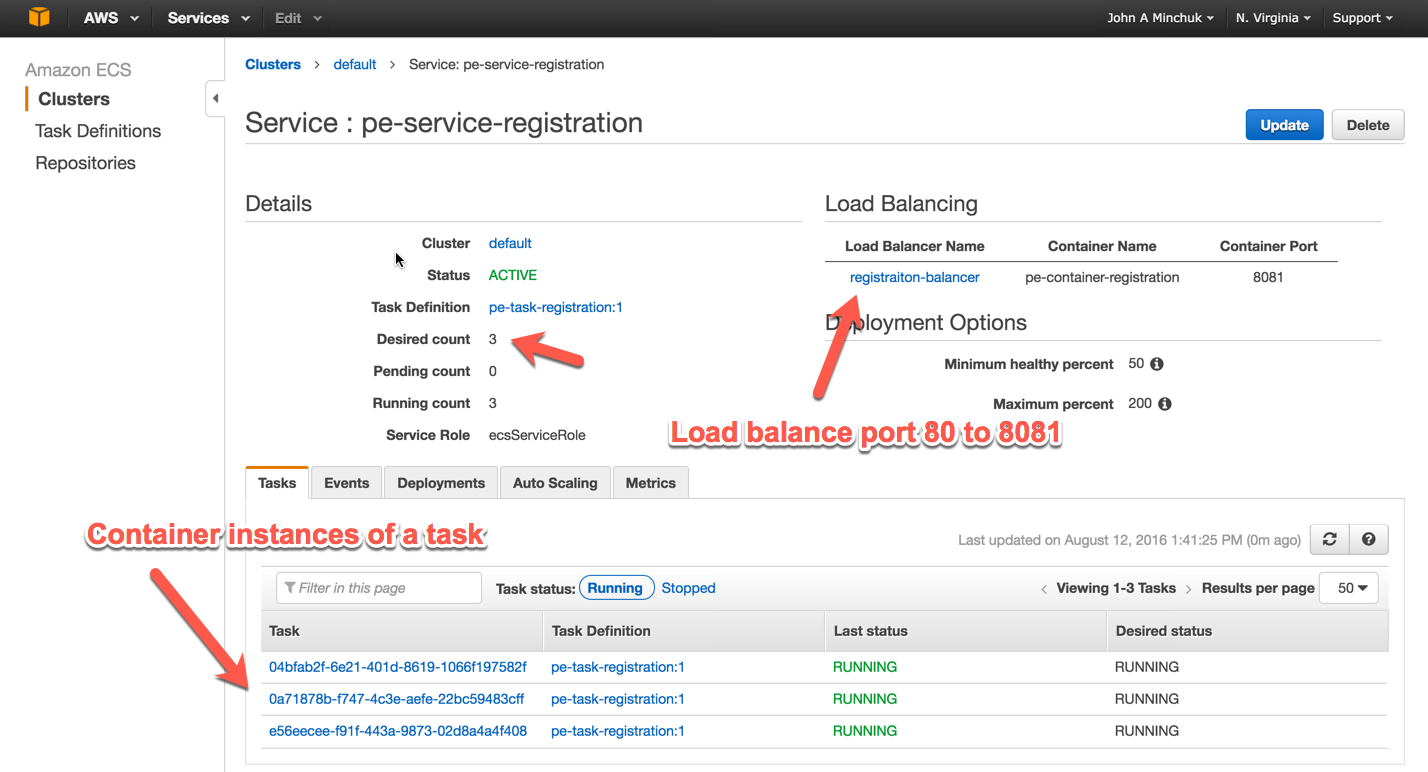

You can also see my cluster, which in its completed state has 3 servers with 4 Docker containers running on them (see the diagram above).

Also listed above are the Services we configured in the Cluster. Services let you group Docker containers and specify options such as how many of them you want and how traffic should be balanced between them.
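Once a service exists, you can change how many containers it runs from the command line with aws ecs update-service and its --desired-count option. In this sketch the function name is hypothetical, and you pass in the cluster and service names you created in the wizard.

```bash
# Set how many copies of a service's task ECS should keep running.
# Pass your own cluster and service names; none are assumed here.
scale_service() {
  local cluster=$1 service=$2 count=$3
  if [[ ! $count =~ ^[0-9]+$ ]]; then
    echo "desired count must be a non-negative integer" >&2
    return 1
  fi
  aws ecs update-service \
    --cluster "$cluster" \
    --service "$service" \
    --desired-count "$count"
}
```

ECS then starts or stops containers until the running count matches the number you asked for.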

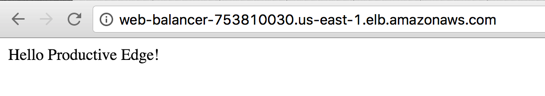

If you configured your Load Balancer properly, you can visit it in your browser. Requests will be distributed across the active Containers in the Cluster.
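Round robin is one common way a load balancer spreads requests: each new request goes to the next container in the list, wrapping around at the end. The sketch below only simulates that policy with hypothetical container addresses. The algorithm your AWS load balancer actually uses depends on its type and settings.

```bash
# Simulate round-robin load balancing: request i goes to target i mod N,
# so each container receives every Nth request in turn.
# Targets are hypothetical host:port pairs.
round_robin() {
  local requests=$1
  shift
  if (( $# == 0 )); then
    echo "at least one target is required" >&2
    return 1
  fi
  local -a targets=("$@")
  local n=${#targets[@]} i
  for (( i = 0; i < requests; i++ )); do
    echo "request $i -> ${targets[i % n]}"
  done
}
```

Adding a container to the list immediately gives it a share of the traffic, which is why scaling a service up also spreads the load.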

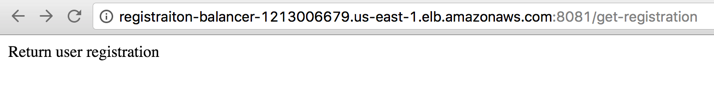

Here’s a manual request to the registration service we built. Note that this service runs on port 8081 so as not to conflict with the web frontend on port 8080. The Load Balancer hides this detail from us, so we can get results from the registration service right over port 80.

Going Forward

This quick overview only scratches the surface of how Docker and Microservice Architecture can be used to make drastic improvements for your teams and products. Here are some of the benefits you can expect to see when adopting this architecture.

- Reduced costs

- Faster development

- Faster and more consistent deployment

- Loosely coupled code and teams

- Easier maintenance and extensibility

- More reliable infrastructure

If you’re looking to leverage Docker in a larger environment, we recommend considering solutions like Spring Cloud Netflix and OpenShift. These solutions fit into the larger Docker ecosystem and can make deploying and developing even easier.
